3rd-party tools in Amazon AWS

With the ever-growing need for digital resources, an increasing number of companies have decided in recent years to move their IT to a public cloud. As a consequence, the security requirements for protecting their assets against external attacks have increased as well. Public cloud providers such as Amazon Web Services (AWS) offer several options for a needs-based protective set-up within their cloud environments. In addition to their own firewall portfolio, they allow third-party vendor solutions to be integrated, for example next-generation firewalls (NGFW) made available on the cloud platform's marketplace.

In this tech blog, we provide a general overview of corporate IT in public cloud environments such as AWS. We describe the requirements and challenges of deploying third-party tools, using the next-generation firewall as an example.

Third-party next-generation firewalls in public clouds

There are two basic usage models for third-party tools in a public cloud environment: pay as you go (PAYG) and bring your own license (BYOL). They differ mainly in billing, invoicing, licensing and intended purpose.

- PAYG is generally recommended for short-term or temporary use, for instance for proofs of concept (PoCs) or to cover temporary load peaks, e.g. around Christmas time. This is because billing is usually handled directly by the cloud provider on an hourly or, in some cases, annual basis.

- BYOL licences, on the other hand, are procured and billed once in advance (on a “perpetual” basis) for a defined usage period of one, three or five years, which is why this option is recommended for longer-term, constant demand.

So far, so good. However, a number of studies have shown that costs are among the biggest challenges in adopting public cloud services. In commercial terms, budgeting for the third-party next-generation firewall alone is not enough: each deployment model can also incur additional time- and volume-dependent charges, per hour or per GB, for the use of the public cloud infrastructure. These charges can have a significant impact on total costs.
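The cost comparison described above can be sketched in a few lines of Python. This is only an illustration: every price in it is a hypothetical placeholder, not a figure from any provider's or vendor's price list, and the names `Pricing`, `payg_total`, `byol_total` and `break_even_hours` are invented for this example. It assumes the comparison stays within a single BYOL licence term.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass(frozen=True)
class Pricing:
    """Hypothetical placeholder prices, not real price-list values."""
    payg_fee_per_hour: float    # firewall software fee billed by the cloud provider
    byol_licence_price: float   # upfront licence price for the whole term
    infra_per_hour: float       # instance cost, due in both models
    traffic_per_gb: float       # volume-dependent cost, due in both models


def infrastructure_cost(p: Pricing, hours: float, gb: float) -> float:
    """Time- and volume-dependent charges that apply regardless of licensing."""
    return hours * p.infra_per_hour + gb * p.traffic_per_gb


def payg_total(p: Pricing, hours: float, gb: float) -> float:
    return hours * p.payg_fee_per_hour + infrastructure_cost(p, hours, gb)


def byol_total(p: Pricing, hours: float, gb: float) -> float:
    return p.byol_licence_price + infrastructure_cost(p, hours, gb)


def break_even_hours(p: Pricing) -> float:
    """Running hours after which BYOL becomes cheaper than PAYG.

    Infrastructure costs are identical in both models, so they cancel out.
    """
    return p.byol_licence_price / p.payg_fee_per_hour


example = Pricing(
    payg_fee_per_hour=1.50,
    byol_licence_price=6000.0,
    infra_per_hour=0.40,
    traffic_per_gb=0.02,
)

if __name__ == "__main__":
    for hours in (500, HOURS_PER_YEAR):
        print(
            f"{hours:>5} h: PAYG {payg_total(example, hours, 1000):9.2f}"
            f" | BYOL {byol_total(example, hours, 1000):9.2f}"
        )
    print(f"break-even after {break_even_hours(example):.0f} running hours")
```

With these invented numbers, a short proof of concept is far cheaper under PAYG, while a firewall that runs all year passes the break-even point and favours BYOL. In both cases the infrastructure charges come on top of the licence cost.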

Security in the public cloud: “shared responsibility”

Why then, with providers advertising the high security level of their cloud, do you still need your own next-generation firewall in the first place? The answer does not contradict the providers’ claims, but we do need to take into account the “shared responsibility model”: depending on the offering, the service of a public cloud provider may include only the infrastructure itself (IaaS), a virtual platform (PaaS) or everything all the way up to the software (SaaS). In each setting, the customer remains responsible for the risk in the layers above, where attacks can also take place.

Outsourcing corporate IT to a public cloud is therefore by no means an automatic solution: In terms of security, users must fill this “gap” themselves – one which, depending on the service model, may range from protecting their data to securing their network, containers, applications and the configuration of the platform itself.
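As a rough illustration of this “gap”, the following Python sketch maps each service model to the layers that stay with the customer. The four-layer stack and the names `LAYERS`, `PROVIDER_TOP_LAYER` and `customer_layers` are simplifying assumptions made for this example; a real provider's responsibility matrix is more fine-grained.

```python
# Simplified stack, ordered from bottom to top (an assumption for illustration).
LAYERS = ["infrastructure", "platform", "software", "data"]

# Highest layer the provider takes care of in each service model.
PROVIDER_TOP_LAYER = {
    "IaaS": "infrastructure",
    "PaaS": "platform",
    "SaaS": "software",
}


def customer_layers(service_model: str) -> list[str]:
    """Return the layers above the provider's part, which the customer must secure."""
    top = PROVIDER_TOP_LAYER[service_model]
    return LAYERS[LAYERS.index(top) + 1:]


if __name__ == "__main__":
    for model in PROVIDER_TOP_LAYER:
        print(f"{model}: customer secures {', '.join(customer_layers(model))}")
```

Even in this coarse model, no service model leaves the customer with an empty list: the data always remains the customer's responsibility.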

Public, private or multi-cloud – deployment models vary greatly

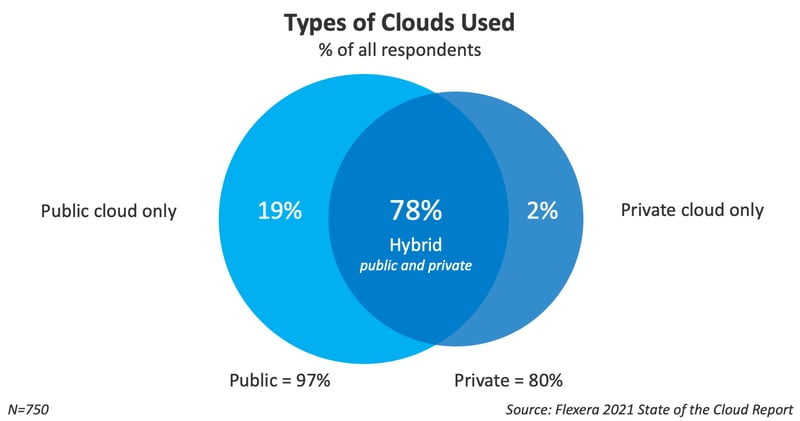

Today, there’s a noticeable trend towards hybrid cloud architectures – perhaps not least because of the recent experience accumulated by users. This keen interest is also highlighted in the current “Flexera 2021 State of the Cloud Report” (https://info.flexera.com/CM-REPORT-State-of-the-Cloud). The survey conducted in October and November 2020 examines the existing and future strategies of 750 global decision-makers and users with regard to private, public and multi-cloud use, with an eye on the impact of the COVID-19 pandemic on corporate IT.

The report concludes that a large proportion of respondents are already embracing hybrid cloud models. In addition, it shows that users have opted to migrate some applications back to their private cloud while leaving others in the public cloud, presumably due to application-specific advantages and disadvantages.

It can therefore be assumed that, in future, the requirements of each application will be examined closely to determine whether a public or a private cloud is the better fit. In addition, the trend towards hybrid network structures is driven by edge computing, an explicitly hybrid computing approach in which data processing is decentralised, i.e. takes place where data is generated or collected.

Security strategies tailored to individual needs

With the increasing adoption of hybrid cloud architectures, there is also a growing need for enterprises to increase visibility and implement security checkpoints at the transitions between networks, application zones and areas of responsibility.

Agility plays an important role too, and it entails network segmentation. A frequent example of this is the separation of development and production environments, or different organisational units creating and running applications in different virtual networks, or even in different clouds and data centres. Each of these scenarios requires both East-West and North-South communication to be monitored and secured. One way to do so is a security services hub: traffic between virtual network segments, and connections to destinations outside the public cloud, are routed through a dedicated virtual network segment (the security services hub) so that specific next-generation firewall functions can be applied to filter the communication.
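The hub principle can be modelled in a short Python sketch. It is a conceptual toy, not a cloud or firewall API: the segment names, the `Rule` type and the functions `route` and `forward` are all hypothetical, and the allow-list stands in for whatever next-generation firewall functions are applied at the hub.

```python
from dataclasses import dataclass
from typing import Optional

HUB = "security-hub"  # hypothetical name for the dedicated hub segment


@dataclass(frozen=True)
class Rule:
    """One allowed flow; anything not listed is dropped at the hub."""
    source: str
    destination: str
    port: int


def route(source: str, destination: str) -> list[str]:
    """Traffic leaving a segment always transits the hub."""
    if source == destination:
        return [source]  # stays inside one segment, never reaches the hub
    return [source, HUB, destination]


def forward(rules: set[Rule], source: str, destination: str, port: int) -> Optional[list[str]]:
    """Return the path taken, or None if the hub filters the flow."""
    path = route(source, destination)
    if HUB in path and Rule(source, destination, port) not in rules:
        return None
    return path


rules = {
    Rule("dev", "prod", 443),       # East-West: between virtual network segments
    Rule("prod", "internet", 443),  # North-South: leaving the public cloud
}

if __name__ == "__main__":
    print(forward(rules, "dev", "prod", 443))
    print(forward(rules, "dev", "prod", 22))
    print(forward(rules, "prod", "internet", 443))
```

Because every inter-segment path contains the hub, East-West and North-South flows are inspected at one place, which is what makes a central view of security events possible.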

Conclusion: Security should be formulated from the very start

Transforming their IT architecture can confront companies with a whole host of challenges, as the (network) principles of their own on-premises architecture cannot be transferred one-to-one to public cloud platforms. From a security perspective, hybrid cloud transformations raise additional aspects, too.

As a result, companies should formulate and plan a uniform and integrated security architecture concept right from the outset. In other words, the existing architecture should be expanded to include firewalls within the cloud, or adapted in a way that allows for a central view of security events and a consistent implementation of specifications and rules.

Deep integration is also a basic requirement for, e.g., the automated deployment of a next-generation firewall, a topic that we will cover in our blog post entitled “Automation in the cloud with Terraform”!

