Decoupling networking hardware from its software has been identified as an enabler for the move from traditionally rigid, cost-intensive network components and their network functions to agile, flexible resource deployment. Since then, the virtualisation of network functions has become a prominent way to cope with both near-term and long-term demands in networking. This approach to designing a network and its components is known as Network Functions Virtualisation (NFV), introduced and specified by the European Telecommunications Standards Institute (ETSI). Virtualisation itself is the key element in deploying the NFV concept in practice: a network function is transformed into a Virtual Network Function (VNF) and deployed in virtualised environments, primarily cloud environments, on the basis of the ETSI specification.

The opportunity to access mature technologies for the virtualisation of computing resources leads to innovative approaches to redesigning networks and their components for future deployments. NFV offers highly automated mechanisms to provide access to network functions on demand.

Virtualisation technologies

In the literature, virtualisation usually refers to the well-known and fairly mature technology of server virtualisation. Server virtualisation creates multiple instances on one server that share resources such as processor power or memory space, while this sharing remains hidden from the applications running inside the instances. Although the instances share the same computing, storage and network resources, an abstraction layer isolates each instance from the others. The abstraction layer is sometimes called the “virtualisation layer” or “software layer”; the terms are interchangeable. The deployment of virtualisation technology aims to increase efficiency and reduce costs by benefiting from “hardware independence, isolation, secure user environments, and increased scalability” [RF], although these benefits may be offset by reduced performance compared to traditional bare-metal infrastructures. As new challenges have been met with smart concepts and evolved techniques, a wide range of virtualisation technologies has become available.

Virtualisation faces an inherent trade-off between performance and isolation that needs to be managed carefully. Maximum isolation results in poorer performance, and because there is always an aspiration to maximise performance, creative strategies are needed so that the isolation of instances and the resulting security considerations are not neglected. On the whole, virtualisation techniques fall into two main categories: hypervisor-based and non-hypervisor-based techniques. In this tech blog, we focus on the basics of hypervisor-based concepts.

Hypervisor-based Concepts

A hypervisor is a layer deployed on top of a single physical infrastructure. It provides an abstract view of the hardware, which allows multiple operating systems to share the same hardware resources at the same time, while each operating system appears to be using its own physical computing resources.

The four levels of privilege

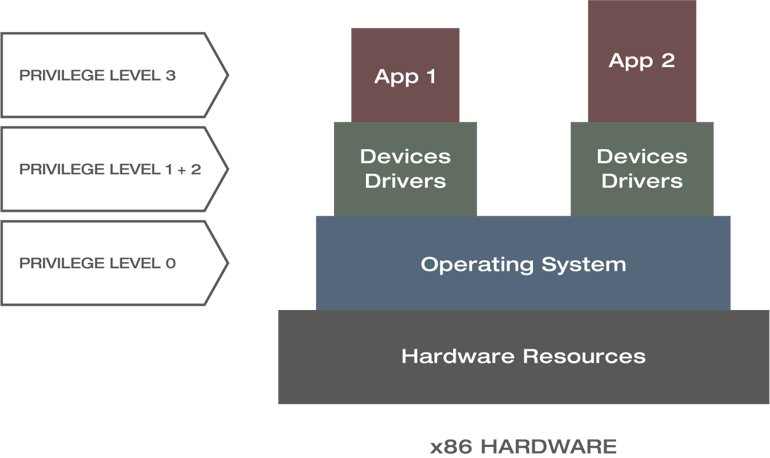

To understand the hypervisor-based approaches, the hierarchical relations and privilege levels of hardware resources, operating systems, device drivers and applications within a computing architecture must all be considered. The accompanying figure shows a basic model applied to a bare-metal x86 hardware architecture.

Privilege levels of an x86 architecture [CRH17]

The components of this architecture are mapped to four privilege levels, from level zero, the most privileged, for the operating system to level three, the least privileged, for the applications. The literature also refers to these levels as privilege rings, which is simply a different schematic representation and an interchangeable term.
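The privilege levels can be expressed as a minimal Python sketch. The names `Ring` and `may_execute` are hypothetical and only illustrate the ordering; assigning levels one and two to device drivers follows the classic x86 ring model and is an assumption here, not something taken from the figure.

```python
from enum import IntEnum


class Ring(IntEnum):
    """x86 privilege levels; a lower number means more privilege."""
    OPERATING_SYSTEM = 0  # most privileged
    DRIVERS_1 = 1         # assumption: device drivers, classic ring model
    DRIVERS_2 = 2         # assumption: device drivers, classic ring model
    APPLICATIONS = 3      # least privileged


def may_execute(current: Ring, required: Ring) -> bool:
    """Return True if code running at `current` is privileged enough
    for an operation that requires at least `required`."""
    return current <= required


print(may_execute(Ring.OPERATING_SYSTEM, Ring.OPERATING_SYSTEM))  # True
print(may_execute(Ring.APPLICATIONS, Ring.OPERATING_SYSTEM))      # False
```

The comparison simply uses the numeric ordering: an application at level three cannot perform an operation reserved for level zero.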

Full Virtualisation

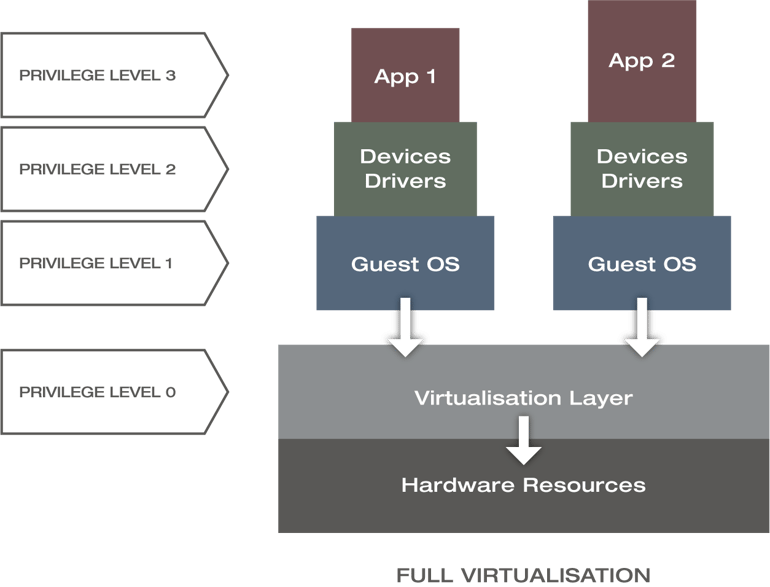

Full virtualisation completely decouples the software from the hardware by placing a virtualisation layer on top of the hardware at privilege level zero and running multiple guest Operating Systems (OSs) at privilege level one, as shown in the accompanying figure.

Privilege levels of Full Virtualisation [CRH17]

The hypervisor, also known as the virtual machine manager, interacts directly with the hardware and gives each guest OS the same abilities as if it were running directly on the underlying hardware. For instance, every memory access, input/output or interrupt operation is controlled by the hypervisor, which also ensures that the guest operating systems are unaware of each other. This model provides the best isolation and security at the cost of degraded performance. This original form of full virtualisation technology dates back to the late 1990s and no longer has any practical relevance.
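As a rough illustration of this control flow, the toy model below routes every guest memory operation through the hypervisor, which keeps separate state for each guest. All class and method names are hypothetical, and the sketch models only the isolation idea, not a real hypervisor.

```python
from typing import Dict


class Hypervisor:
    """Toy virtual machine manager: owns the 'hardware' and keeps
    a private virtual memory for each guest."""

    def __init__(self) -> None:
        self._guest_memory: Dict[str, Dict[int, int]] = {}

    def create_guest(self, name: str) -> "GuestOS":
        self._guest_memory[name] = {}
        return GuestOS(name, self)

    def trap(self, guest: str, op: str, address: int, value: int = 0) -> int:
        """Handle a privileged operation on behalf of exactly one guest."""
        memory = self._guest_memory[guest]  # only this guest's state is used
        if op == "write":
            memory[address] = value
            return value
        if op == "read":
            return memory.get(address, 0)
        raise ValueError(f"unsupported operation: {op}")


class GuestOS:
    """Guest that behaves as if it owned the hardware."""

    def __init__(self, name: str, hypervisor: Hypervisor) -> None:
        self.name = name
        self._hypervisor = hypervisor

    def write_memory(self, address: int, value: int) -> None:
        # In this model, every privileged operation ends up in the hypervisor.
        self._hypervisor.trap(self.name, "write", address, value)

    def read_memory(self, address: int) -> int:
        return self._hypervisor.trap(self.name, "read", address)


vmm = Hypervisor()
guest_a = vmm.create_guest("a")
guest_b = vmm.create_guest("b")
guest_a.write_memory(0x10, 42)
print(guest_a.read_memory(0x10))  # 42
print(guest_b.read_memory(0x10))  # 0: guest b cannot see guest a's data
```

Because each access passes through `trap`, the model also hints at where the performance cost comes from: no guest operation reaches the hardware without the hypervisor in between.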

Paravirtualisation

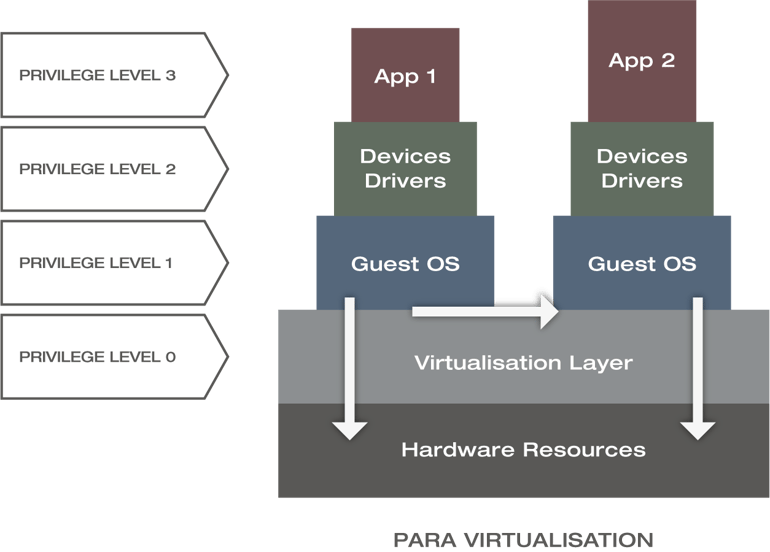

In order to mitigate performance losses, paravirtualisation enables time-critical functions to interact directly with the hardware by introducing hypercalls, which results in a slightly different privilege model (see the accompanying figure). Hypercalls are based on Application Programming Interfaces (APIs) provided by the hypervisor. The guest OS in each Virtual Machine (VM) makes API calls to use the hypervisor’s virtualisation features and to interact directly with the hardware resources (see the white arrows in the figure).

Privilege level of Paravirtualisation [CRH17]

Unlike in full virtualisation, guest OSs are aware of each other (see the white horizontal arrow) and share common hardware resources, knowing that they operate on top of a hypervisor. As such, they are not fooled by emulated hardware resources. Guest OSs must be modified to work in a paravirtualised environment, which requires extra development effort but improves performance. Because these modifications add complexity to the guest system, paravirtualisation also entails greater and more complex maintenance effort. One well-known product that utilises paravirtualisation is XenServer.
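The hypercall idea can be sketched in a few lines of Python. The hypercall names (`set_timer`, `send_packet`) and both classes are hypothetical and do not correspond to the API of Xen or any other real hypervisor; the point is only that a modified guest calls the hypervisor explicitly.

```python
from typing import Callable, Dict, List


class ParavirtHypervisor:
    """Toy hypervisor exposing a small, hypothetical hypercall API."""

    def __init__(self) -> None:
        self.log: List[str] = []
        self._hypercalls: Dict[str, Callable[[int], int]] = {
            "set_timer": self._set_timer,
            "send_packet": self._send_packet,
        }

    def hypercall(self, name: str, argument: int = 0) -> int:
        """Dispatch a hypercall by name; return -1 if it is unknown."""
        handler = self._hypercalls.get(name)
        if handler is None:
            return -1
        return handler(argument)

    def _set_timer(self, deadline_ms: int) -> int:
        self.log.append(f"timer armed for {deadline_ms} ms")
        return 0

    def _send_packet(self, length: int) -> int:
        self.log.append(f"sent {length} bytes")
        return 0


class ParavirtGuest:
    """Guest OS that has been modified to know about the hypervisor."""

    def __init__(self, hypervisor: ParavirtHypervisor) -> None:
        self._hypervisor = hypervisor

    def schedule_wakeup(self, deadline_ms: int) -> int:
        # Explicit API call instead of an operation the hypervisor
        # would first have to intercept.
        return self._hypervisor.hypercall("set_timer", deadline_ms)


hypervisor = ParavirtHypervisor()
guest = ParavirtGuest(hypervisor)
guest.schedule_wakeup(5)
print(hypervisor.log)  # ['timer armed for 5 ms']
```

The `ParavirtGuest` class stands for the extra development and maintenance effort mentioned above: the guest code itself has to contain the hypercall.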

Hardware-Assisted Virtualisation

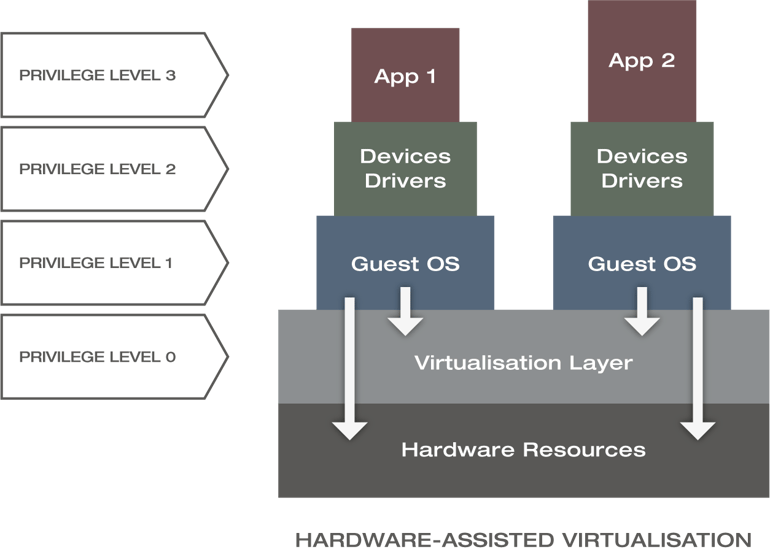

As full virtualisation comes with significant system overhead, performance loss and limited support for the deployment of a large number of VMs, hardware-assisted virtualisation was introduced to overcome these issues. Hardware-assisted virtualisation is an enhancement of the full virtualisation technique. Consequently, it is still hypervisor-based, with changes in the fine details of the privilege level model.

Privilege levels of Hardware-Assisted Virtualisation [CRH17]

The figure above shows that, with hardware-assisted virtualisation, guest OSs gain direct access to the hardware, resulting in better performance and throughput. Unlike paravirtualisation, hardware-assisted virtualisation reduces the maintenance overhead significantly and ideally eliminates the need for changes in the guest OSs. It is also considerably easier to obtain better performance than with paravirtualisation, because the host OS is not responsible for managing privileges and address space translation in this case. For example, the Linux Kernel-based Virtual Machine (KVM) with Quick Emulator (QEMU) and VMware’s ESXi use hardware-assisted full virtualisation.
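Whether a processor offers this hardware support can be checked on an x86 Linux host through the CPU flags in `/proc/cpuinfo`, where `vmx` indicates Intel VT-x and `svm` indicates AMD-V. The following is a minimal sketch; the function name is hypothetical and the sample text is made up for illustration.

```python
from typing import Set


def hardware_virtualisation_flags(cpuinfo_text: str) -> Set[str]:
    """Return the virtualisation-related CPU flags ('vmx', 'svm')
    found in text formatted like /proc/cpuinfo on x86 Linux."""
    found: Set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            found |= {"vmx", "svm"} & set(value.split())
    return found


# Made-up sample; on a real host, read the file contents instead.
sample = "processor\t: 0\nflags\t\t: fpu vme de pse vmx sse2\n"
print(hardware_virtualisation_flags(sample))  # {'vmx'}
```

On a real Linux system, the input could come from `open("/proc/cpuinfo").read()`. An empty result suggests that the extensions are missing or disabled in the firmware.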

Virtual Machines (VMs)

Regardless of the preferred virtualisation design, hypervisor-based virtualisation generates encapsulated guest OS entities, known as Virtual Machines. Compared to bare-metal implementations, the deployment of VMs in a virtual environment offers reduced costs, easy scalability, fast backups, relatively secure and isolated environments and, especially, high availability, because multiple copies of the same VM can exist at the same time. Due to the nature of virtualisation, however, VMs incur a performance penalty.

Hypervisor Types

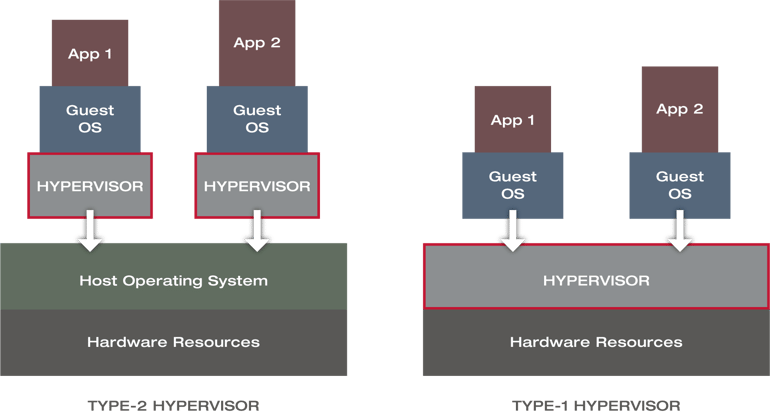

Virtual Machines can be deployed with two types of hypervisors, called type-1 and type-2 hypervisors. The type-1 hypervisor is the bare-metal version: it runs directly on the hardware and therefore offers significantly higher performance and better isolation than the type-2 hypervisor. However, because there is no host OS and the hypervisor is self-contained, deployment is much more complicated, as the hardware and hypervisor need to be tested for compatibility. VMware ESXi, Microsoft Hyper-V and Xen are all based on a type-1 hypervisor concept.

Type-2 and Type-1 Hypervisors [CRH17]

Type-2 hypervisors run on top of a host OS, share resources with the guest OS and are more convenient and quicker to deploy than type-1 hypervisors. There is no specific hardware dependency; accordingly, they do not have to be tested against specific hardware, which lowers costs and deployment effort. KVM/QEMU, VMware Workstation and Oracle VirtualBox are based on a type-2 hypervisor concept (see figure above).

This tech blog is based on the Master’s thesis “Analysis of Network Orchestration with competing Virtualisation Technologies” by Marcel F., which was developed in a cooperative effort between the University of Applied Sciences Cologne (Germany) and Xantaro. This work was supervised by Professor Andreas Grebe of the University of Applied Sciences Cologne and Solutions Architect Dr Hermann Grünsteudel of Xantaro.

[RF] – Ramalho, F.; Neto, A.: Virtualization at the network edge: A performance comparison. 2016 IEEE 17th International Symposium on A World of Wireless, Mobile and Multimedia Networks, 2016.

[CRH17] – Chayapathi, R.; Hassan, S.F.; Shah, P.: Network Functions Virtualisation (NFV) with a touch of SDN, 2017.
